During our ongoing research on the security of cloud service providers and cloud based applications, we performed a regular audit of our AWS account password. Thinking of popular incidents and evergreens in attack vectors, we were wondering which consequences an online bruteforce attack on our AWS password would have. So we decided to perform a bruteforce attack against our own account. Analyzing the login process of AWS, the following requirements for the bruteforce tool to be used could be derived:

- Cookie Handling

- HTTPS support

- HTTP 3xx support.

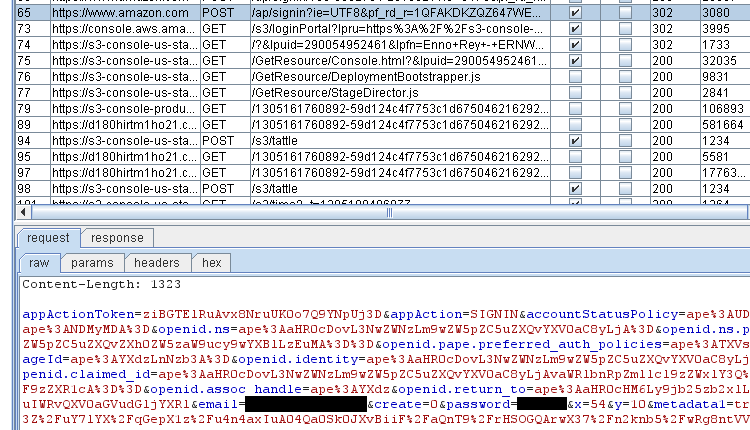

It turned out that it was pretty hard to find a password testing tool which fulfilled these requirements and would be able to actually handle the complex AWS login process — eventually there was none. Since we use and like Burp Suite pretty much, the Intruder suggested itself as an alternative which is straight forward to configure even though it might lack the speed and efficiency of special bruteforcing tools. Using burp’s history, we were able to identify the request which triggered the login process:

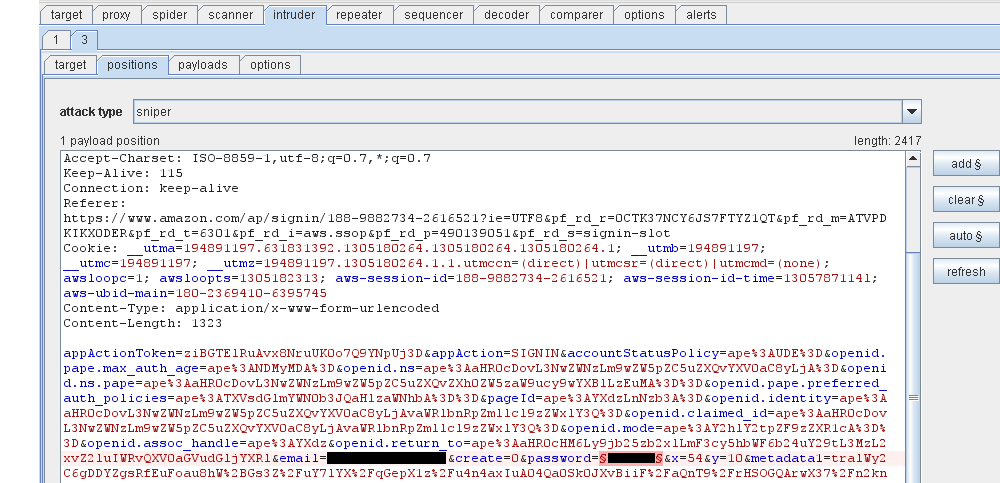

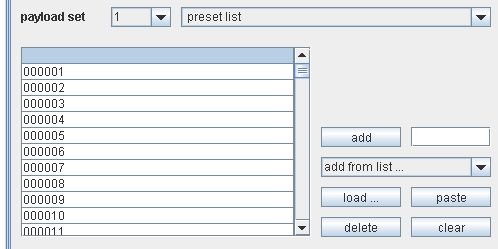

After the request is sent to the Intruder, the password field is marked

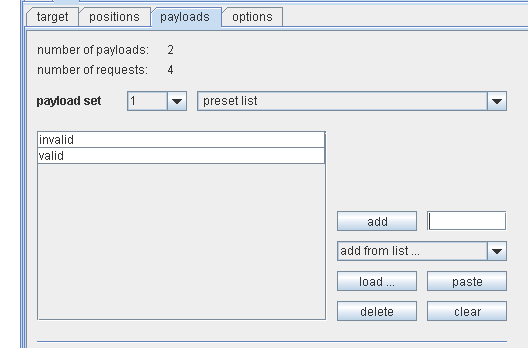

and the payloads to be used are configured.

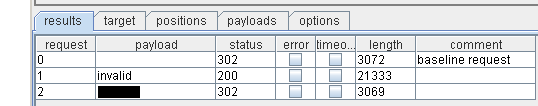

Using exemplary payloads, it is possible to identify a successful login attempt, since it results in a redirect to the authenticated area/SSO server/whatever whereas a wrong passwords results in HTTP 200 presenting the AWS login page again:

Having this basic bruteforcing process established, the wordlist to be used must be generated. To decide which complexity should be covered, the Amazon password policy must be analyzed — if the restrictions in place deserve to be called a policy. The only restriction is that the password is between 6 and 20 characters (though the upper limit was determined regarding the maxlength field parameter when changing the password using the webfrontend, since there is no documentation about this available. Thinking of business needs, this behavior might be understandable since Amazon loses “endusers” and therefore money if their password policy is too strict). So we decided to use a wordlist which contains all passwords of 6 characters consisting of numbers (which can be generated pretty easy reactivating some old perl scripting skills: perl -le ‘printf “%06d”, $_ foreach(1..999999)’ 😉 ). Such passwords even might be pretty common when thinking of “birthday passwords”.

After performing about 400k requests, we paused the attack and searched for requests which resulted in a HTTP 302 response, just as the baseline request did.

And indeed, it was possible to bruteforce the password — which is not such a big surprise though. The bigger — and worse — surprise is, that it was still possible to login to our amazon account after performing about 2 million requests (including some dry runs) within two days originating from one single IP adress without having the account locked, being throttled down or notified in any way. And we were performing about 80k requests per hour.

Coming back to the title of the blogpost: At the moment of our investigation, there were no protection mechanisms against bruteforce attacks for the key to your datacenter — which your AWS credentials actually can be, if you are hosting a large amount of your services in EC2. Following a repsonsible disclosure policy, we contacted the AWS Security Team and got a very comprehensive answer. As we supposed, they pointed us to their MFA solution, which is basically, even though there was a major incident recently, a viable security control when authenticating users for data center access. But in addition, we had a long and beneficial dialog about potential mechanisms such as connection throttling and account locking. The outcome of our discussion is a CAPTCHA mechanism which kicks in after a brute force attempt is detected — and was also re-tested several times by our bruteforcing attempt. It was quite impressive to see that it was possible for Amazon to implement additional security measures in such a short time frame, regarding the huge size and complexity of the AWS environment. So we were really glad to get in touch with the committed AWS Security Team and were really happy to see that those guys are really into security and trying to communicate with their customers.

Continue reading