This is the third (and last) part of the series (parts 1 & 2 here). We’ll present the results of some additional tests run on a public cloud service, namely AWS (Amazon Web Services).

Lab Setup

The Amazon Elastic Compute Cloud (EC2) provides a flexible environment for the on-demand provisioning of virtual machines of different performance levels. For our lab setup, a so-called Extra Large instance was used. According to Amazon, its technical specs are the following:

15 GB memory

8 EC2 Compute Units (4 virtual cores with 2 EC2 Compute Units each)

1,690 GB instance storage

64-bit platform

I/O Performance: High

API name: m1.xlarge

Since the I/O performance of single disks had turned out to be the bottleneck in the “local” setup, eight Elastic Block Store (EBS) volumes were created and attached to the instance. Each EBS volume is hosted within a specific availability zone and can only be attached to instances running in the same zone. EBS volumes can be created and attached by issuing two commands of the Amazon EC2 command line tools, so the amount of storage can be scaled up very easily. The only requirement (for our tests) is a sufficient number of EBS volumes, each of which then holds a part of the pcap file to be analyzed.
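The create-and-attach step can be sketched in a few lines. Note that this is not the method used in our lab (we used the EC2 command line tools); the sketch below swaps in the boto3 Python SDK instead. The function name `provision_volumes`, the volume size of 100 GiB and the device names `/dev/sdf` onwards are assumptions made for illustration only.

```python
def provision_volumes(ec2, instance_id, zone, count=8, size_gib=100):
    """Create `count` EBS volumes in `zone` and attach them to `instance_id`.

    `ec2` is expected to behave like a boto3 EC2 client. The size and the
    device naming scheme are illustrative assumptions, not lab values.
    """
    attached = []
    for i in range(count):
        # Step 1: create the volume in the same availability zone as the instance.
        volume_id = ec2.create_volume(AvailabilityZone=zone, Size=size_gib)["VolumeId"]
        # A new volume has to reach the "available" state before it can be attached.
        ec2.get_waiter("volume_available").wait(VolumeIds=[volume_id])
        # Step 2: attach it under the next free device name (/dev/sdf, /dev/sdg, ...).
        device = "/dev/sd" + chr(ord("f") + i)
        ec2.attach_volume(VolumeId=volume_id, InstanceId=instance_id, Device=device)
        attached.append((volume_id, device))
    return attached
```

With boto3 installed and credentials configured, this could be called as `provision_volumes(boto3.client("ec2"), instance_id, zone)`, where the instance ID and zone are those of the running instance. Passing the client in as a parameter keeps the function easy to try out against a stub.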

Results

During the benchmarks, performance was significantly lower than with the setup described in the previous post, even though eight separate EBS volumes were used to avoid the bottleneck of a single storage volume. The overall performance of the test was apparently limited by the I/O restrictions of virtualized instances and virtualized storage systems.

Following the overall cloud computing paradigm, performance limitations of this kind might be circumvented by using multiple resources that do the processing in parallel. This could be done with multiple instances or with frameworks like Amazon Elastic MapReduce, which are designed to process huge data sets.

Applying this approach to the analysis of pcap files, however, runs into some inherent problems with the structure of the pcap format. The format is a binary representation of the data that is ordered by the capture time of the packets and not by logical packet streams. Each worker instance would therefore have to process the complete pcap file to extract all streams and identify which of them it is supposed to analyze. This prevents an efficient distribution of the analysis across multiple jobs or input files. If the captured network data were stored as separate streams instead of one big pcap file, processing with a map/reduce algorithm would be possible and could increase scalability significantly.
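To illustrate why the time-ordered layout gets in the way, here is a minimal sketch of a pcap reader that groups packets by stream. It is not the tooling used in our tests. It assumes the classic little-endian pcap format with microsecond timestamps and Ethernet/IPv4 frames carrying TCP or UDP; the function names `iter_records`, `flow_key` and `group_by_flow` are made up for this example.

```python
import struct
from collections import defaultdict

PCAP_GLOBAL_HEADER = struct.Struct("<IHHiIII")  # 24-byte file header
PCAP_RECORD_HEADER = struct.Struct("<IIII")     # 16-byte per-packet header
PCAP_MAGIC = 0xA1B2C3D4


def iter_records(stream):
    """Yield (timestamp, raw_frame) for every record, in file order."""
    header = stream.read(PCAP_GLOBAL_HEADER.size)
    if PCAP_GLOBAL_HEADER.unpack(header)[0] != PCAP_MAGIC:
        raise ValueError("this sketch only handles little-endian classic pcap")
    while True:
        raw = stream.read(PCAP_RECORD_HEADER.size)
        if len(raw) < PCAP_RECORD_HEADER.size:
            return
        ts_sec, ts_usec, incl_len, _orig_len = PCAP_RECORD_HEADER.unpack(raw)
        # Records have variable length, so the only way to find record n+1
        # is to have read the header of record n.
        yield ts_sec + ts_usec / 1e6, stream.read(incl_len)


def flow_key(frame):
    """Return a direction-independent stream key, or None for other traffic."""
    if len(frame) < 34 or frame[12:14] != b"\x08\x00":  # not Ethernet/IPv4
        return None
    ihl = (frame[14] & 0x0F) * 4  # IPv4 header length in bytes
    proto = frame[23]
    l4 = 14 + ihl
    if proto not in (6, 17) or len(frame) < l4 + 4:  # neither TCP nor UDP
        return None
    sport, dport = struct.unpack("!HH", frame[l4:l4 + 4])
    endpoints = sorted([(frame[26:30], sport), (frame[30:34], dport)])
    return (proto, endpoints[0], endpoints[1])


def group_by_flow(stream):
    """Map each stream key to the indices of its packets within the file."""
    flows = defaultdict(list)
    for index, (_ts, frame) in enumerate(iter_records(stream)):
        key = flow_key(frame)
        if key is not None:
            flows[key].append(index)
    return dict(flows)
```

The packets of any given stream can be scattered across the whole file, and record boundaries are only known by walking the record headers from the start. A worker that is handed an arbitrary slice of the file therefore cannot tell which streams it holds completely.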

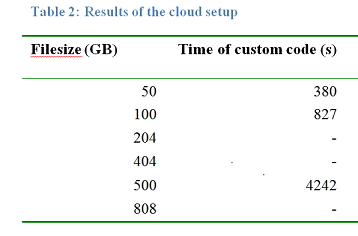

That said, finally here are the results of our testing (test methodology described in earlier post):

So it took much longer to extract the data from a 500 GB file, which can be attributed to the increased latency of accessing centralized storage (from a SAN/over the network) compared to locally connected SSDs.

Hopefully this little series provided some insight for you, dear readers. We’ll publish the full technical report as an ERNW Newsletter in the coming months.

Have a good one, thanks

Enno

https://labs.ripe.net/Members/wnagele/large-scale-pcap-data-analysis-using-apache-hadoop

kc,

thanks for your hint. The big problem with this approach (which is also mentioned in both our post and the release notes quoted by you) is that you need multiple PCAP files to spread over the different Map-Reduce instances. As PCAP files are not organized in a “strictly sequential” way, and our project had the premise of one large PCAP file, this approach does not improve performance when you have to split up the PCAP file beforehand.

Thanks & have a nice one,

Matthias